How I tripled my AI accuracy in 2025 (using Lenny’s prompt engineering playbook)

When mastering AI, the best way to start is by learning how to prompt. A single well-crafted prompt can transform a mediocre result into something awe-inspiring.

While I was jogging the other day, I tuned into Lenny’s podcast on prompt engineering and realized that most online guides are years behind.

I was stunned by how many popular prompting techniques are overrated: everyone knows them, but they don’t actually work in 2025.

From then on, I changed how I prompt.

I want to share how I tripled my AI accuracy this year by using Lenny’s prompt engineering playbook, with examples.

We’ll cover:

- outdated techniques you should stop using

- the most effective prompting techniques that change everything

- bonus cheat sheets to supercharge your prompts

What are the prompting techniques that are no longer effective?

Have you heard of “role prompting” or “one-shot prompts”?

If you’re using them… stop! They don’t work anymore.

Sander Schulhoff, the OG prompt engineer, said these popular techniques are basically useless in 2025.

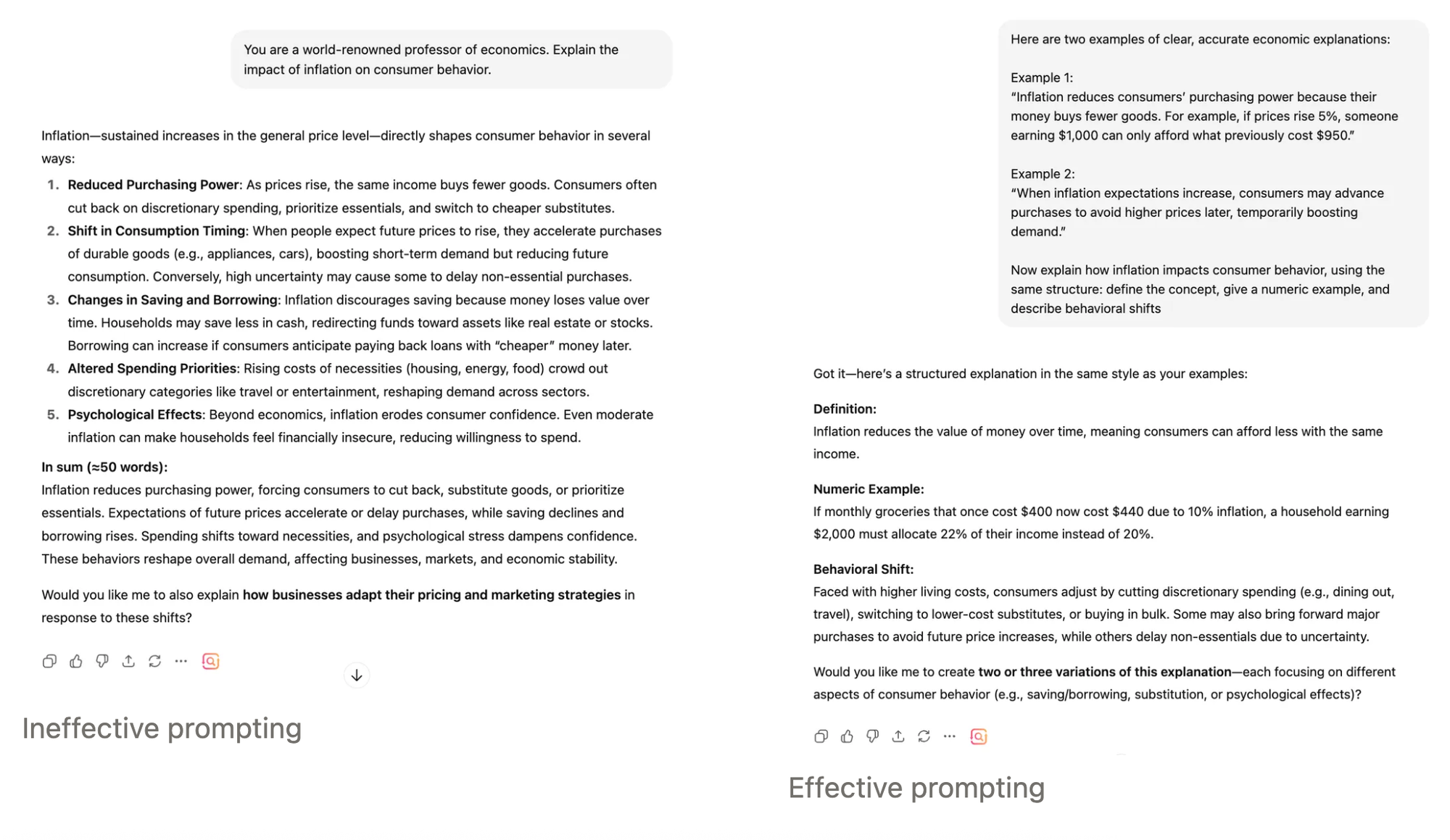

Role prompt technique

Role prompting is one of the most overrated techniques for communicating with ChatGPT.

And this year, it’s no longer working.

Telling the AI…

- "You are a professor…"

- “Act like a career coach…”

- “You are a veteran researcher…”

mainly affects tone and has little impact on accuracy.

Role prompts may still help with writing style, but they don't make the AI smarter or more accurate.

It's like putting a lab coat on someone and expecting them to become a doctor overnight.

You are a world-renowned professor of economics. Explain the impact of inflation on consumer behavior.

Why it fails: Labeling the model as a “professor of economics” only tweaks the tone—it doesn’t improve the accuracy or depth of the explanation.

A stronger version of the same prompt:

Here are two examples of clear, accurate economic explanations:

Example 1: “Inflation reduces consumers’ purchasing power because their money buys fewer goods. For example, if prices rise 5%, someone earning $1,000 can only afford what previously cost about $952.”

Example 2: “When inflation expectations increase, consumers may advance purchases to avoid higher prices later, temporarily boosting demand.”

Now explain how inflation impacts consumer behavior, using the same structure: define the concept, give a numeric example, and describe behavioral shifts.

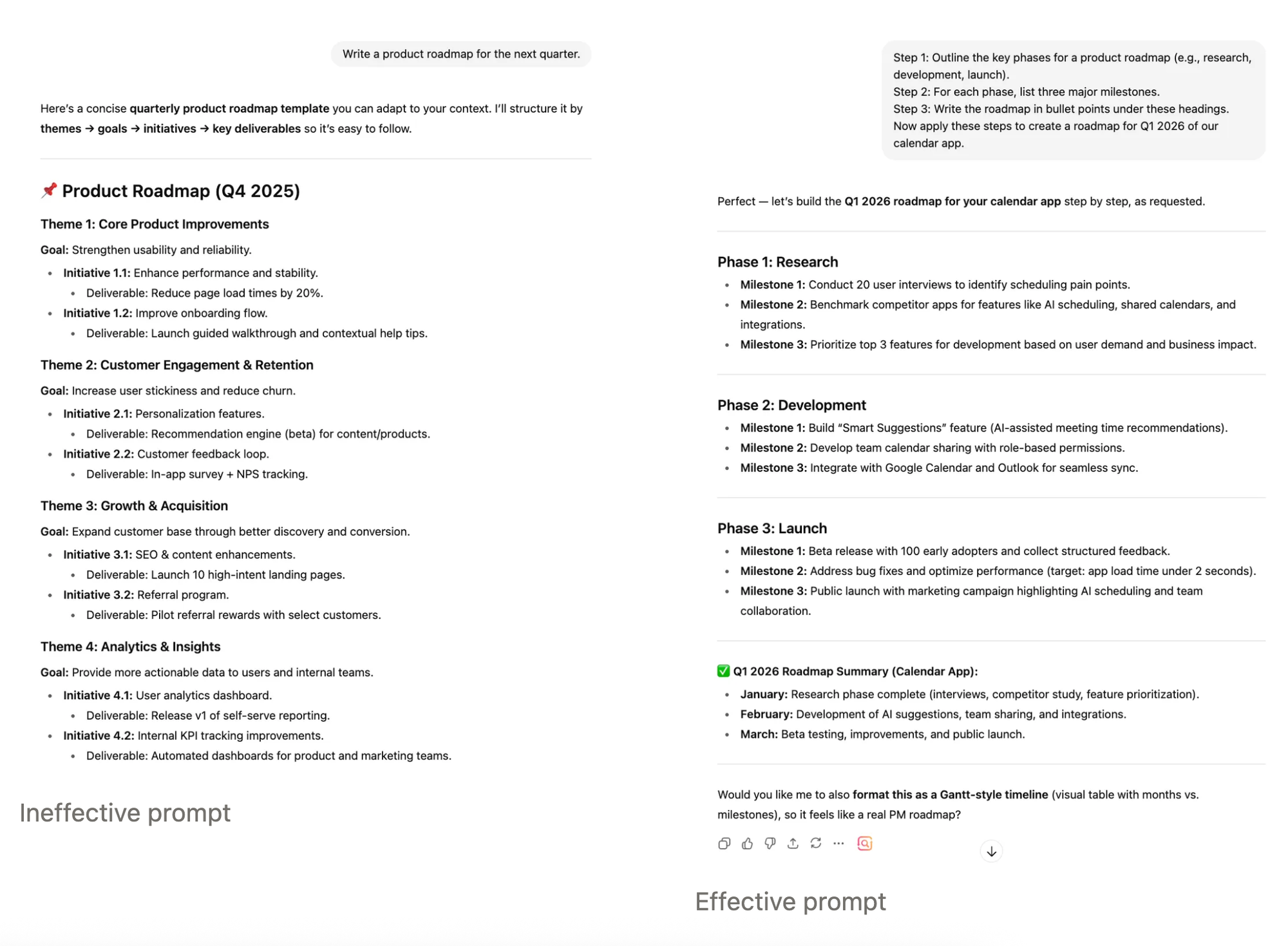

One-shot prompts (or the lazy approach)

These vague, single-line prompts, fired off with no examples or structure, often yield generic, unreliable outputs.

Think of it like giving someone one example of how to cook and expecting them to become a chef.

Write a product roadmap for the next quarter.

Why it fails: Without examples or structure, the AI’s output is likely to be vague or misaligned with your needs.

Think of the AI as your virtual assistant: a one-line task is not enough for it to understand what you really mean. Spell out the steps instead:

Step 1: Outline the key phases for a product roadmap (e.g., research, development, launch).

Step 2: For each phase, list three major milestones.

Step 3: Write the roadmap in bullet points under these headings.

Now apply these steps to create a Q1 2026 roadmap for our calendar app.

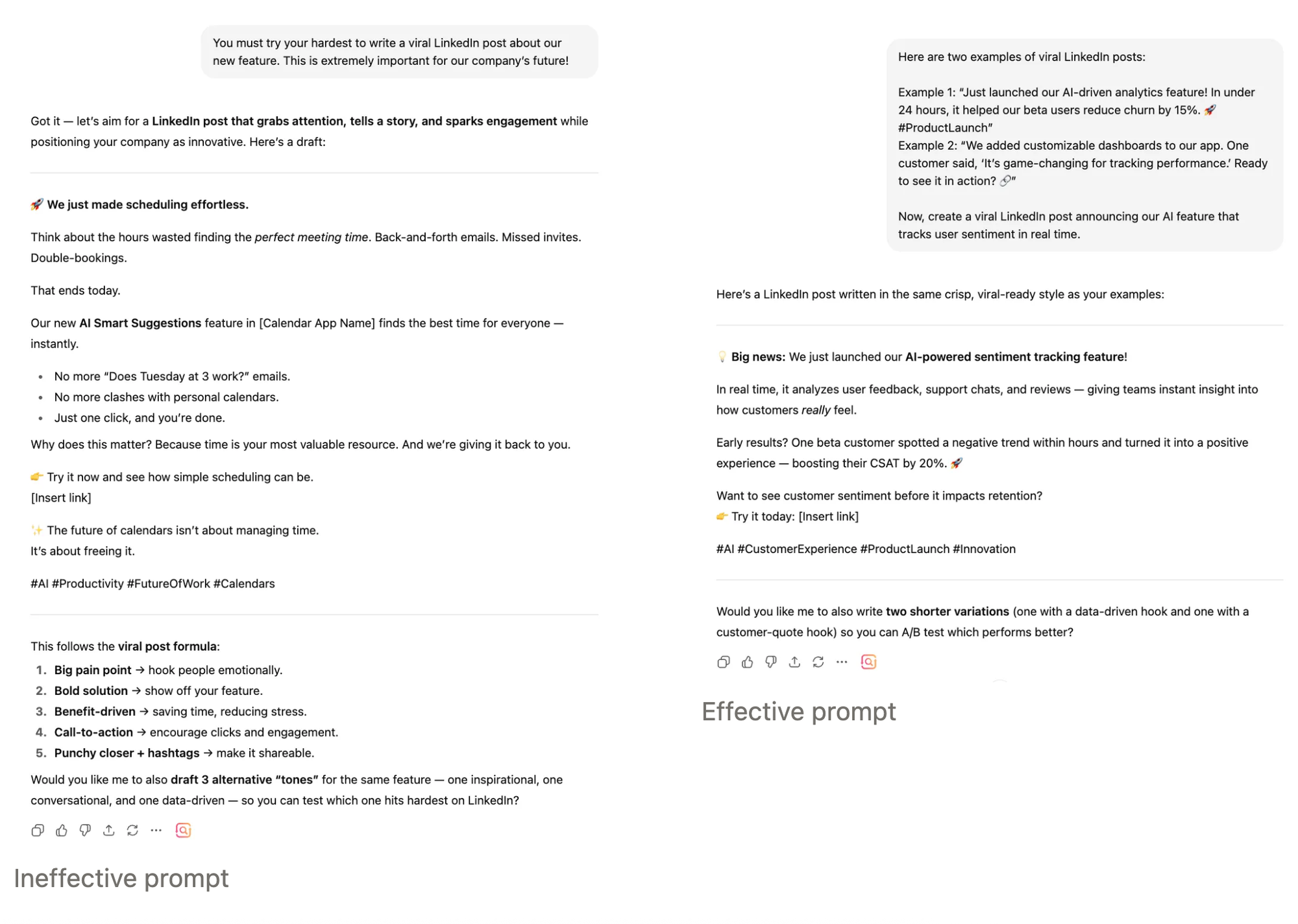

Emotional threat prompt

Phrases like…

- "You must try your hardest!"

- "This is very important!"

- “This must be perfect!”

no longer influence modern LLMs.

The AI isn't motivated by your desperation or enthusiasm. It's not human, and these emotional appeals are just wasted tokens.

You must try your hardest to write a viral LinkedIn post about our new feature. This is extremely important for our company’s future!

Why it fails: The AI doesn’t care about “trying hardest” or “company importance,” so it ignores the emotional framing and produces a generic post.

A stronger version of the same prompt:

Here are two examples of viral LinkedIn posts:

Example 1: “Just launched our AI-driven analytics feature! In under 24 hours, it helped our beta users reduce churn by 15%. 🚀 #ProductLaunch”

Example 2: “We added customizable dashboards to our app. One customer said, ‘It’s game-changing for tracking performance.’ Ready to see it in action? 🔗”

Now, create a viral LinkedIn post announcing our AI feature that tracks user sentiment in real time.

What are the most effective prompt techniques that actually work?

Lenny interviewed both Sander Schulhoff and Mike Taylor, two of the most prominent names in prompt engineering.

And here are the techniques that actually work, according to them, with simple explanations and examples.

Few-shot prompting: the game changer

Did you know that this technique can take accuracy from 0% to 90% in some cases?

Yes, you read that right. So, how do you use this technique?

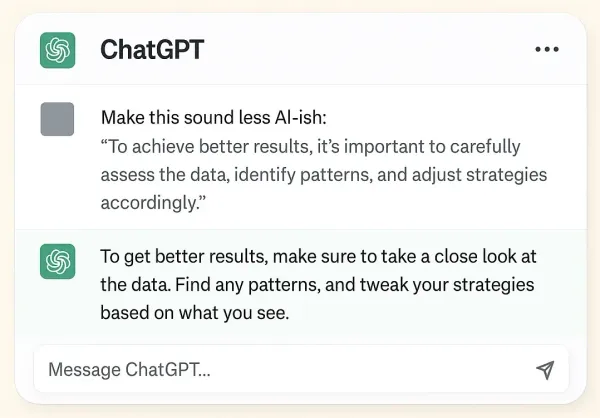

Simple: Give the AI real examples of exactly what you want. Sander shares how it works.

If you look back at the improved prompts above, you’ll notice that most of them already include real examples.

Here are examples of [task]:

Input: [example 1 input]

Output: [example 1 output]

Input: [example 2 input]

Output: [example 2 output]

Now do the same for:

Input: [your actual input]

Here are examples of turning features into benefits:

Feature: 256GB storage

Benefit: Store thousands of photos and never worry about running out of space

Feature: Waterproof design

Benefit: Take photos confidently at the beach or pool without fear

Now turn this feature into a benefit:

Feature: 12-hour battery life
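
If you reuse this template a lot, it can help to build it in code. Here is a minimal Python sketch of that idea. The function name `build_few_shot_prompt` and its parameters are my own, not something from the podcast, and ending the prompt with a bare output label is just one common way to nudge the model into completing the pattern.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    task: str,
    examples: Sequence[Tuple[str, str]],
    new_input: str,
    input_label: str = "Input",
    output_label: str = "Output",
) -> str:
    """Assemble a few-shot prompt: task line, worked examples, then the new input."""
    if not examples:
        raise ValueError("few-shot prompting needs at least one example")
    lines = [f"Here are examples of {task}:", ""]
    for example_input, example_output in examples:
        lines.append(f"{input_label}: {example_input}")
        lines.append(f"{output_label}: {example_output}")
        lines.append("")
    lines.append("Now do the same for:")
    lines.append(f"{input_label}: {new_input}")
    # Assumption: finish with the bare output label so the model fills it in.
    lines.append(f"{output_label}:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    task="turning features into benefits",
    examples=[
        ("256GB storage", "Store thousands of photos and never worry about running out of space"),
        ("Waterproof design", "Take photos confidently at the beach or pool without fear"),
    ],
    new_input="12-hour battery life",
    input_label="Feature",
    output_label="Benefit",
)
print(prompt)
```

The printed text can be pasted into any chat window or sent through whichever model API you use.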

Decomposition: break it down

Decomposition simply means breaking your problem or task down into sub-problems. This reduces ambiguity and improves reasoning in multi-step workflows.

For example, say you want the AI to help you write a blog post. In your head, you want it to:

- research keywords,

- search and summarize the SERPs,

- create a blog outline,

- write the draft,

- add FAQs, and

- publish!

If you put all six steps into one prompt, you probably won’t get the best results. With decomposition, you split the work into sub-steps and spread them across several prompts.

I need to create [task/problem] for X. First, help me [first_step]. Later on, we will work together on [second_step].

I need to write a blog post titled "5 prompting techniques that work" for my website. First, help me identify the search volume for the keyword "prompting techniques" and provide secondary keywords that I can include in my blog.

Later on, we will collaborate on reviewing the SERPs and summarizing the top articles on the topic.
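
If you would rather script this than type each prompt by hand, the same idea might look like the rough Python sketch below. Everything here is an assumption for illustration: `run_decomposed` is a name I made up, and `ask` stands for whatever function sends a prompt to your model and returns its reply. The `fake_ask` stub only exists so the example runs without an API key.

```python
from typing import Callable, List


def run_decomposed(goal: str, steps: List[str], ask: Callable[[str], str]) -> List[str]:
    """Send one prompt per sub-task, feeding earlier results into later prompts."""
    results: List[str] = []
    for number, step in enumerate(steps, start=1):
        prompt_parts = [f"Overall goal: {goal}"]
        if results:
            # Carry forward what the earlier steps produced.
            prompt_parts.append("Results so far:")
            prompt_parts.extend(f"- {r}" for r in results)
        prompt_parts.append(f"Step {number} of {len(steps)}: {step}")
        results.append(ask("\n".join(prompt_parts)))
    return results


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; echoes the current step line.
    return "done: " + prompt.splitlines()[-1]


outputs = run_decomposed(
    goal='Write a blog post titled "5 prompting techniques that work"',
    steps=["Research keywords", "Summarize the SERPs", "Create a blog outline"],
    ask=fake_ask,
)
print(outputs)
```

Each step gets its own focused prompt, and later steps see the earlier results, which is the whole point of decomposition.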

Self-critique: the quality control

This simply involves asking the AI to critique its own work and then apply that critique to its initial output.

Here’s how it goes:

- Ask the AI to give an answer.

- Ask the AI to critique or find mistakes in its answer.

- Ask the AI to rewrite the answer using its own feedback.

Prompt #1:

[Your request]

After providing your answer, please review it and identify any potential issues or improvements.

Prompt #2:

Great! Now rewrite your answer using your own suggestions.

Prompt #1:

Write a product roadmap for Q1 2026. After you finish, critique your own work and suggest improvements.

Prompt #2:

Great! Now implement your own suggestions to improve your initial output.
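
For anyone running this loop through code instead of a chat window, here is a small Python sketch of the answer, critique, rewrite cycle. Treat it as an illustration under assumptions: `self_critique` is my own helper name, `ask` is a placeholder for your model call, and `fake_ask` returns canned text so the example runs offline.

```python
from typing import Callable, Dict


def self_critique(request: str, ask: Callable[[str], str]) -> Dict[str, str]:
    """Run the answer -> critique -> rewrite loop once and return all three texts."""
    draft = ask(request)
    critique = ask(
        "Review the answer below and list any mistakes or possible improvements.\n\n"
        f"Request: {request}\n\nAnswer: {draft}"
    )
    revised = ask(
        "Rewrite the answer using the feedback below.\n\n"
        f"Request: {request}\n\nAnswer: {draft}\n\nFeedback: {critique}"
    )
    return {"draft": draft, "critique": critique, "revised": revised}


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for a model call so the sketch runs offline.
    if prompt.startswith("Review"):
        return "Add owners and dates to each milestone."
    if prompt.startswith("Rewrite"):
        return "Roadmap v2 with owners and dates."
    return "Roadmap v1."


result = self_critique("Write a product roadmap for Q1 2026.", fake_ask)
print(result["revised"])
```

Keeping the draft and the critique around makes it easy to check whether the rewrite actually addressed the feedback.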

Context expansion: feed the machine

In the world of AI, content is not king. Context is.

Giving the AI model more relevant background about your subject can drastically improve its performance.

Include bios, research papers, or past interactions in the correct format and order.

Context: [relevant background information]

Task: [what you want done]

Output format: [how you want it formatted]

Context: Our company just launched a new time-tracking app designed for remote teams. The app integrates with Slack and provides weekly productivity reports.

Task: Please write a short announcement email to notify all employees about the launch of the time-tracking app and explain its main benefits.

Output format: 3-sentence email, using a friendly and encouraging tone.
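
The Context / Task / Output format layout is easy to turn into a reusable helper. The Python sketch below is one possible way to do it. `build_context_prompt` is a name I picked for illustration, and the check for empty sections is my own addition rather than part of the technique itself.

```python
def build_context_prompt(context: str, task: str, output_format: str) -> str:
    """Join the three labeled sections into one prompt, refusing empty sections."""
    sections = {"Context": context, "Task": task, "Output format": output_format}
    missing = [name for name, text in sections.items() if not text.strip()]
    if missing:
        # Assumption: fail loudly instead of silently sending a prompt without context.
        raise ValueError(f"missing sections: {', '.join(missing)}")
    return "\n\n".join(f"{name}: {text.strip()}" for name, text in sections.items())


context_prompt = build_context_prompt(
    context=(
        "Our company just launched a new time-tracking app designed for remote teams. "
        "The app integrates with Slack and provides weekly productivity reports."
    ),
    task=(
        "Please write a short announcement email to notify all employees about the "
        "launch of the time-tracking app and explain its main benefits."
    ),
    output_format="3-sentence email, using a friendly and encouraging tone.",
)
print(context_prompt)
```

Raising an error on a blank section is a cheap guard against the "assume the AI knows your context" mistake.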

Ensembling and thought generation

How does this work? Simple.

Run multiple prompt variations in parallel and combine the best outputs. Or ask the model to "think step-by-step" aloud.

Think through this step by step:

1. What are the key considerations?

2. What are potential approaches?

3. What's the best solution and why?

[Your actual question]
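
To make the ensembling half concrete, here is a rough Python sketch. It combines outputs with a simple majority vote, which is only one way to "combine the best outputs" and my own choice for this example. The names `ensemble_answer` and `fake_ask` are hypothetical, and `ask` stands in for a real model call. For brevity the variations run one after another rather than in parallel.

```python
from collections import Counter
from typing import Callable, List


def ensemble_answer(prompts: List[str], ask: Callable[[str], str]) -> str:
    """Ask every prompt variation and return the most common normalized answer."""
    answers = [ask(p).strip().lower() for p in prompts]
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first.
    return Counter(answers).most_common(1)[0][0]


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for a model: one of the three variations disagrees.
    return "negative" if prompt.startswith("You will") else "Positive"


question = "Is this review positive or negative? 'Great battery, love it.'"
variations = [
    f"{question}\nAnswer with one word.",
    f"Think through this step by step, then answer with one word.\n{question}",
    f"You will classify a review.\n{question}\nAnswer with one word.",
]
print(ensemble_answer(variations, fake_ask))
```

Majority voting works best when answers are short and comparable, such as labels or numbers. For long-form text you would need a different way to merge the outputs.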

Prompt engineering cheat sheet

Here's a quick reference you can bookmark:

✅ DO:

- Provide 2-5 examples (few-shot prompting)

- Break complex tasks into steps

- Ask for self-criticism

- Include relevant context

- Be specific about format and tone

❌ DON'T:

- Use role prompting for accuracy

- Add emotional threats or pressure

- Give vague, one-shot prompts

- Assume the AI knows your context

- Forget to specify the output format

Try these techniques on your own. Here are the recommended steps:

- Pick 1-2 techniques from this list.

- Start with few-shot prompting: it's the easiest to implement and often has the most significant impact.

- Show the AI exactly what you want with a couple of examples, and watch your results transform overnight.

Remember, prompting isn't just asking questions—it's designing the AI's reasoning path. Master these techniques, and you'll unlock capabilities that most people don't even know exist.

FAQs:

What are the types of prompt engineering?

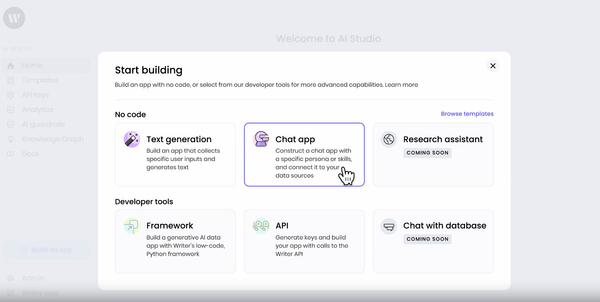

- Conversational prompting: Interactive, chat-style conversations where you can refine outputs in real-time

- Product-focused prompting: Single prompts baked into applications, executed millions of times, and treated like production code

For conversational prompting, you can be more experimental. For product prompts, you need the same rigor as software engineering—version control, testing, and monitoring.
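
What might "treated like production code" look like in practice? Below is a minimal Python sketch, assuming you keep each prompt as a versioned template and score it against a few known cases before shipping. `PromptVersion`, `pass_rate`, and `fake_ask` are names I invented for this example, and the substring check is a deliberately crude stand-in for a real evaluation.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PromptVersion:
    name: str
    version: str
    template: str  # uses str.format placeholders, e.g. "{text}"

    def render(self, **values: str) -> str:
        return self.template.format(**values)


def pass_rate(
    prompt: PromptVersion,
    cases: List[Tuple[str, str]],
    ask: Callable[[str], str],
) -> float:
    """Fraction of (input, expected) cases whose model output contains the expected text."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = sum(
        expected.lower() in ask(prompt.render(text=text)).lower()
        for text, expected in cases
    )
    return passed / len(cases)


sentiment_v2 = PromptVersion(
    name="sentiment",
    version="2.0.0",
    template="Classify the sentiment as positive or negative.\nText: {text}\nSentiment:",
)


def fake_ask(prompt: str) -> str:
    # Hypothetical stand-in for the deployed model.
    return "negative" if "refund" in prompt else "positive"


cases = [("Love the new dashboard", "positive"), ("I want a refund", "negative")]
rate = pass_rate(sentiment_v2, cases, fake_ask)
print(f"{sentiment_v2.name} v{sentiment_v2.version}: {rate:.0%} of cases passed")
```

Running the same cases against every new version gives you a simple regression check before a prompt change reaches users.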

How important is prompt engineering in 2025?

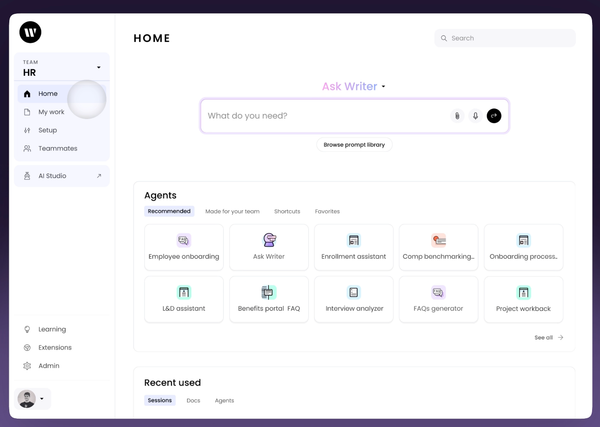

Prompt engineering is absolutely critical in 2025. Well-crafted prompts mean higher accuracy, better relevance, and fewer mistakes—especially when prompts are used in products at scale.

Companies now treat prompt engineering like software development: prompts are versioned, tested, and improved over time.

Sources: